Automating deploys to Kubernetes using ArgoCD and jsonnet

So you have a Kubernetes cluster and a bunch of applications. You trigger deploys by manually pushing templated YAML to your cluster. The codebase is large, and the manual process is time-consuming and error-prone. You need a better way. Well, let me help you with an opinionated suggestion.


By Gustav Karlsson · December 16, 2020

ArgoCD

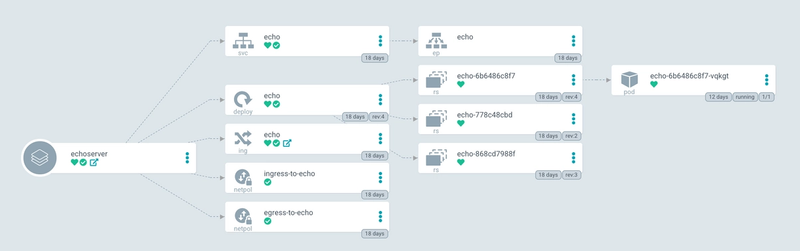

ArgoCD is a continuous delivery tool for Kubernetes that uses Git repos as the source of truth for the desired state of the cluster (following the GitOps philosophy). It runs inside the cluster and continuously monitors the Git repos for changes, rendering them into Kubernetes manifests and applying them to the cluster. So, contrary to the common pipeline approach where changes are pushed in, ArgoCD pulls them. It also comes with a UI for visualizing resources and their relations, which is particularly handy for newcomers to Kubernetes. (The UI can also be used to administer applications, repositories, etc., but then you are engaging in ClickOps rather than GitOps.)

The central component is the custom resource `Application`, which is essentially a pointer to a Git repo containing code that can be rendered into Kubernetes manifests. You can specify the branch or tag, the path, whether it should be synced automatically, parameters to inject, etc. See the docs (which are excellent, btw) for an example showing all the knobs.
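To make those knobs concrete, here is a minimal sketch of an Application written in jsonnet (it could just as well be YAML). The field names follow the `argoproj.io/v1alpha1` Application spec; the repo URL, path, app name and the `env` top-level argument are hypothetical placeholders.

```jsonnet
// A single Argo CD Application. Hypothetical values: repoURL, path, name and the 'env' TLA.
{
  apiVersion: 'argoproj.io/v1alpha1',
  kind: 'Application',
  metadata: {
    name: 'echoserver',
    namespace: 'argocd',  // typically the namespace Argo CD itself is installed in
  },
  spec: {
    project: 'default',
    source: {
      repoURL: 'https://git.example.com/argocd-apps.git',  // hypothetical repo
      targetRevision: 'main',  // branch or tag to track
      path: 'apps/echoserver',  // directory in the repo whose .jsonnet files are rendered
      directory: {
        jsonnet: {
          // top-level arguments passed to the jsonnet entrypoint
          tlas: [{ name: 'env', value: 'test' }],
        },
      },
    },
    destination: {
      server: 'https://kubernetes.default.svc',  // the cluster Argo CD runs in
      namespace: 'default',
    },
    syncPolicy: {
      // sync automatically; prune removed resources and revert manual drift
      automated: { prune: true, selfHeal: true },
    },
  },
}
```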

Deployment orchestration

Applications often consist of multiple components that need to be updated during a deploy, and sometimes these components have an internal ordering that must be honored, i.e. "first do this, then do that". Tools that were not built specifically for deploys are often lacking here. Luckily, ArgoCD solves this elegantly using resource hooks and sync waves.
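As an illustration, ordering is expressed with annotations on the resources themselves. In the sketch below, a migration Job runs as a `PreSync` hook before anything else is applied, a ConfigMap goes out in wave `-1`, and the Deployment in wave `1`, only after the earlier waves are healthy (unannotated resources default to wave 0). The Job, images and ConfigMap contents are hypothetical placeholders.

```jsonnet
// Ordering with Argo CD resource hooks and sync waves.
// Hypothetical names throughout: the 'db-migrate' Job, images and ConfigMap data are placeholders.
local labels = { app: 'echoserver' };

// PreSync hook: runs before the regular resources below are applied
local migrateJob = {
  apiVersion: 'batch/v1',
  kind: 'Job',
  metadata: {
    name: 'db-migrate',
    annotations: {
      'argocd.argoproj.io/hook': 'PreSync',
      // delete the Job once it has completed successfully
      'argocd.argoproj.io/hook-delete-policy': 'HookSucceeded',
    },
  },
  spec: {
    template: {
      spec: {
        restartPolicy: 'Never',
        containers: [{ name: 'migrate', image: 'example/db-migrate:1.0' }],
      },
    },
  },
};

// Wave -1: applied, and required to be healthy, before waves 0 and up
local configMap = {
  apiVersion: 'v1',
  kind: 'ConfigMap',
  metadata: {
    name: 'echoserver-config',
    annotations: { 'argocd.argoproj.io/sync-wave': '-1' },  // annotation values are strings
  },
  data: { GREETING: 'hello' },
};

// Wave 1: applied only after everything in the earlier waves is healthy
local deployment = {
  apiVersion: 'apps/v1',
  kind: 'Deployment',
  metadata: {
    name: 'echoserver',
    annotations: { 'argocd.argoproj.io/sync-wave': '1' },
  },
  spec: {
    selector: { matchLabels: labels },
    template: {
      metadata: { labels: labels },
      spec: {
        containers: [{
          name: 'echoserver',
          image: 'example/echoserver:1.0',
          envFrom: [{ configMapRef: { name: 'echoserver-config' } }],
        }],
      },
    },
  },
};

// Argo CD accepts a jsonnet array of manifests and applies each element
[migrateJob, configMap, deployment]
```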

App-of-apps

Finally, one of the gems of ArgoCD is the pattern referred to as App-of-apps:

An Application may point to a Git repo that renders additional Application CRs (again pointing to other Git repos), continuing in as long a chain as necessary.

So you can effectively bootstrap your entire stack by seeding ArgoCD with a single root Application that transitively renders all your Applications and their underlying resources. No clicking required. You can of course also propagate relevant parameters such as `<env>` to all applications as needed.
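A root Application could, for instance, point at a jsonnet entrypoint like the hypothetical sketch below: it takes `env` as a top-level argument and emits one Application per app, forwarding `env` to each of them. The repo URL and app names are placeholders; in practice the list of apps and their settings would more likely come from per-env vars.

```jsonnet
// Hypothetical app-of-apps entrypoint: one Application per app, each receiving the env TLA.
function(env='test')
  local repoURL = 'https://git.example.com/argocd-apps.git';  // hypothetical repo
  local apps = ['echoserver', 'otherapp', 'anotherapp'];

  // Build an Application pointing at apps/<name>, forwarding env as a TLA
  local application(name) = {
    apiVersion: 'argoproj.io/v1alpha1',
    kind: 'Application',
    metadata: { name: '%s-%s' % [name, env], namespace: 'argocd' },
    spec: {
      project: 'default',
      source: {
        repoURL: repoURL,
        targetRevision: 'main',
        path: 'apps/' + name,
        directory: { jsonnet: { tlas: [{ name: 'env', value: env }] } },
      },
      destination: { server: 'https://kubernetes.default.svc', namespace: 'default' },
      syncPolicy: { automated: {} },
    },
  };

  [application(name) for name in apps]
```

Because the child Applications are themselves just rendered resources, adding an app to the list and pushing is enough for ArgoCD to pick it up.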

Jsonnet

When it comes to selecting a tool/language for describing the Kubernetes manifests, there will be lots of opinions. This is currently mine:

I am a developer and I like to write code. I like to find the right abstractions and create generic, reusable pieces. I have always struggled with templating languages like Jinja2 or Go templates because they are so limited and always "get in my way". After reading "Using Jsonnet does not have to be complex" and "Why the f**k are we templating yaml?", I tried out jsonnet. I had heard it was a bit complex, but my initial skepticism was blown away after trying it. It felt much more familiar than awkward templating languages, and factoring the code into reusable components was very easy (see for example bitnami/kube-libsonnet).

In jsonnet, it is quite simple to build a library that generates the full stack of Kubernetes objects (deployment, service, network policies, ingress, service monitor, etc.) just from a declaration of the stack's config. This is similar to what you would do in a Helm values file, but achieved with code rather than templating. Below is an example of what that might look like.

```jsonnet
local lib = import 'lib/v2/lib.libsonnet';

# top-level arguments (tlas) can be injected here
# (env is required; name and namespace have defaults):
function(env, name='echo-server', namespace='default')
  local vars = lib.loadVars(env);

  (import 'lib/v2/app.libsonnet') {
    # here we are patching the default _config:
    _config+: {
      name: name,
      namespace: namespace,
      imageName: 'hashicorp/http-echo',
      imageTag: vars.tag,
      userPort: 8080,
      replicas: vars.replicas,
      ingress+: {
        path: '/echo/(.*)',
        rewrite: '/$1',
        tlsSecret: 'tls-secret-name',
      },
    },
  }.newAppAsList()
```

If done right, all resources remain fully patchable. And since the jsonnet code is rendered into JSON, which Kubernetes speaks natively, you can (just as with YAML) still do things like:

- Validate the resources.
- Dry-run them against a cluster, e.g. with `kubectl apply --dry-run=server`.
- Diff them against a cluster, e.g. with `kubectl diff`.

This is of course typically handled by ArgoCD, but these can still be useful as development tools.
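To show what "fully patchable" can mean in practice, here is a heavily reduced, hypothetical sketch of such a library. It is not the `lib/v2/app.libsonnet` used above (its contents aren't shown here); it only illustrates the general pattern: defaults live in a hidden `_config` field, each resource is its own field built from that config, and callers override either the config or an individual resource using jsonnet's `+` inheritance. The image and field names are placeholders.

```jsonnet
// Hypothetical, minimal app library illustrating the _config pattern.
local app = {
  // Defaults; callers override these with `_config+:: { ... }`
  _config:: {
    name: error 'name must be set',
    namespace: 'default',
    image: error 'image must be set',
    port: 8080,
    replicas: 1,
  },

  // Object locals see the final (patched) object through `self`
  local c = self._config,
  local labels = { app: c.name },

  deployment:: {
    apiVersion: 'apps/v1',
    kind: 'Deployment',
    metadata: { name: c.name, namespace: c.namespace, labels: labels },
    spec: {
      replicas: c.replicas,
      selector: { matchLabels: labels },
      template: {
        metadata: { labels: labels },
        spec: {
          containers: [{
            name: c.name,
            image: c.image,
            ports: [{ containerPort: c.port }],
          }],
        },
      },
    },
  },

  service:: {
    apiVersion: 'v1',
    kind: 'Service',
    metadata: { name: c.name, namespace: c.namespace, labels: labels },
    spec: {
      selector: labels,
      ports: [{ port: 80, targetPort: c.port }],
    },
  },

  // Collect the resources into the list that gets rendered
  asList():: [self.deployment, self.service],
};

// Patch the config, and patch one resource directly
(app {
  _config+:: { name: 'echo-server', image: 'example/echo-server:1.0', replicas: 2 },
  service+:: { spec+: { type: 'NodePort' } },
}).asList()
```

Because every resource is a named field rather than a string produced by a template, any setting the library did not anticipate can still be patched from the outside.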

Putting the pieces together

Using the app-of-apps pattern referred to above, it is possible to seed the ArgoCD installation with a single Application which will transitively render all Applications for the system. It might look something like:

```
argocd-root            # git repo
  apps/
    lib/               # libraries, managed by jsonnet-bundler or via git submodules
    vars/
      test.libsonnet   # per-env vars for tracking-branch, autosync, tlas etc.
    apps.jsonnet       # renders all Applications according to vars/<env>.libsonnet, injecting tla for env etc.
```

The root repo will render the echoserver Application and propagate relevant TLAs such as `<env>`. The repo that Application points to will in turn render the app-specific Kubernetes manifests. It might look something like:

```
argocd-apps                # git repo
  apps/
    echoserver/
      lib/                 # libraries, managed by jsonnet-bundler or via git submodules
      vars/
        test.libsonnet     # per-env vars for imageTag, replicas etc.
      config/              # static configuration that goes into configmaps etc.
      echoserver.jsonnet
    otherapp/...
    anotherapp/...
```

New Applications are then added by pushing changes to `argocd-root`, and deployments are triggered by pushing changes to `argocd-apps`.
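The per-env `vars` files are where a deploy actually happens: bumping an image tag in the test vars and pushing is the whole release. One wrinkle worth knowing is that jsonnet only accepts string literals in `import`, so a helper like the `lib.loadVars(env)` used earlier cannot build the file path from `env` at runtime; it has to map env names to imports explicitly. Below is a hypothetical sketch of such a helper. The env names and values are placeholders, and the author's actual `loadVars` may work differently.

```jsonnet
// Hypothetical per-env vars, selected by the 'env' TLA.
// In a real repo each object would live in its own file, e.g.
//   test: import 'vars/test.libsonnet',
// because import paths must be string literals, not computed from env.
local varsByEnv = {
  test: { tag: '1.4.0', replicas: 1 },
  prod: { tag: '1.3.2', replicas: 3 },
};

// A loadVars-style helper that fails loudly on an unknown env
local loadVars(env) =
  if std.objectHas(varsByEnv, env) then varsByEnv[env]
  else error 'unknown env: ' + env;

function(env='test') loadVars(env)
```

With this in place, a deploy to test is just a commit that bumps `tag` for `test`, pushed to `argocd-apps`; ArgoCD notices the change and syncs it.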

Voilà! No more manual deploys and hopefully a tidier codebase 🎉.
